Use the ServiceNow Platform to Operationalize Key Requirements of the EU AI Act

The EU Artificial Intelligence Act (EU AI Act) is the world’s first comprehensive legal framework for artificial intelligence. The final version was adopted on 13 June 2024 and published in the Official Journal of the EU on 12 July 2024. The regulation has been in force since 1 August 2024, and most of its requirements will become binding from 2026. The EU AI Act defines strict requirements for AI systems, ranging from governance and risk management through transparency and documentation obligations to ongoing monitoring. Violations can be punished with severe penalties, including fines of up to 7% of global annual turnover. A wide range of responsible roles, including Chief Information Officers, Chief Information Security Officers, Heads of AI, Chief Compliance Officers, Chief Risk Officers and Chief Audit Executives, now face the challenge of implementing these requirements in practice and reviewing them continuously. Companies should therefore address the implementation of the EU AI Act at an early stage and integrate it into their governance and compliance structures.

“The successful implementation of the EU AI Act is more than just the fulfilment of regulatory obligations, it is a strategic opportunity to anchor AI sustainably and securely in companies.” Sebastian Mayer, Managing Director, Protiviti Germany

This white paper summarizes the central requirements of the EU AI Act and shows how companies can implement them in practice with the help of the ServiceNow platform. The focus is on four use cases, from building an AI inventory to automated monitoring, which illustrate how ServiceNow efficiently supports compliance with the EU AI Act.

Key requirements of the EU AI Act

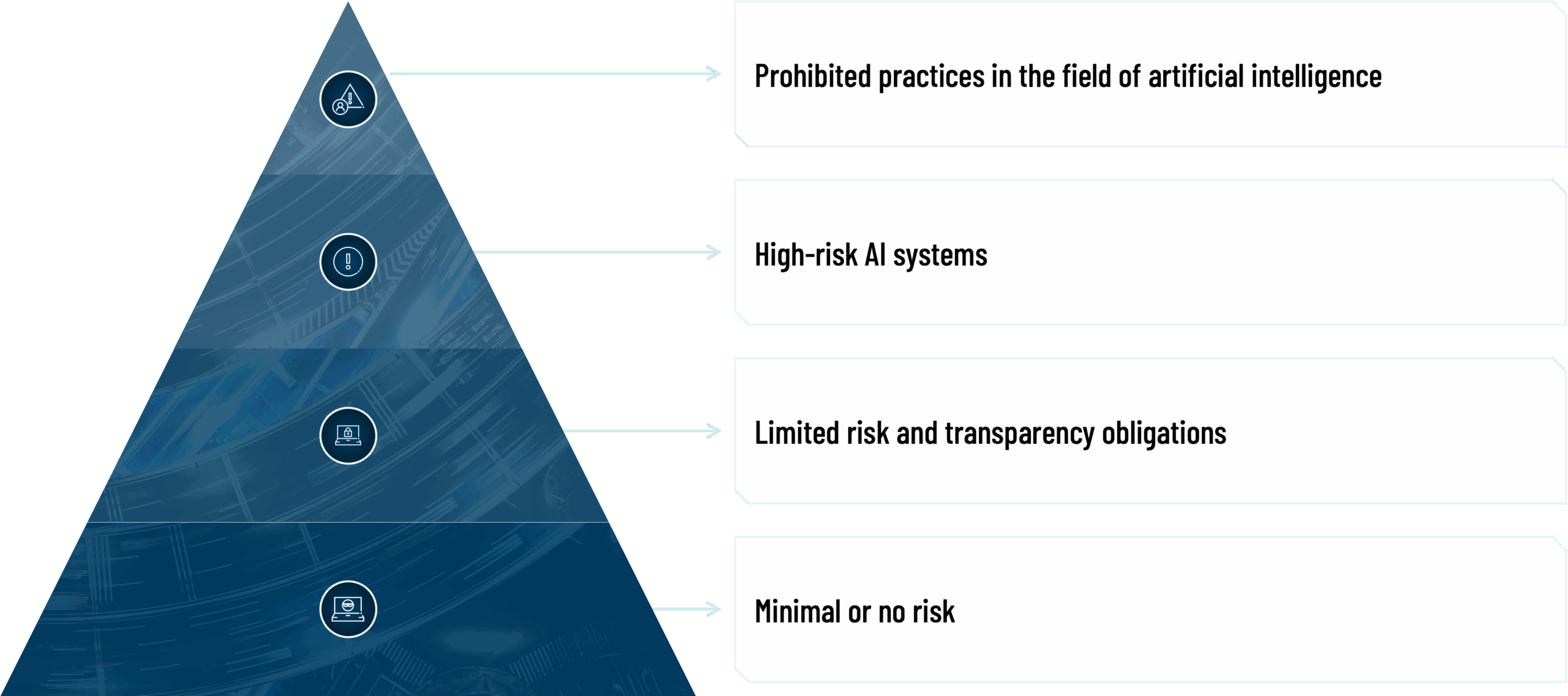

The EU AI Act pursues a risk-based approach and establishes differentiated obligations depending on the risk potential of an AI system. In particular, it defines extensive requirements in the areas of governance, risk management, transparency, risk classification and monitoring. Companies need to understand these requirements and translate them into their governance structures in order to act in accordance with the rules. The most important requirements are summarized below.

Risk classification and prohibited AI practices

Figure 1: The four risk levels of the EU AI Act.

The EU AI Act divides AI applications into four risk levels: unacceptable risk, high risk, limited risk and minimal risk.

- Prohibited practices in the field of artificial intelligence: The regulation names prohibited AI applications that are considered a clear threat to security or fundamental rights. Examples include manipulative AI systems that are intended to influence people subliminally, the exploitation of weaknesses of vulnerable groups (e.g., children), any form of state social scoring, and certain predictive AI for police risk assessment. Also prohibited are, for example, the indiscriminate extraction (automatic or manual) of biometric data such as facial images from the Internet for facial recognition, the biometric identification of people in real time in public spaces for law enforcement purposes (with narrowly limited exceptions), discriminatory emotion recognition in the workplace, and AI that infers protected characteristics (such as religion) through biometric analysis. Companies must ensure that such AI practices and functionalities do not occur in any system they operate or use.

- High-risk AI systems: The category of high-risk systems includes AI systems that have the potential to have a significant impact on the life, health or fundamental rights of people. The legislator mentions various areas of application here, including safety-critical infrastructure (e.g., AI control in the transport sector), education and personnel decisions (e.g. software for applicant selection), access to essential services (e.g. AI-supported creditworthiness checks), police and judicial applications (e.g. evidence assessment) or AI in medicine. Such high-risk AI systems are not prohibited per se but are subject to strict conditions before they can be placed on the market or put into operation.

- Limited risk and transparency obligations: This includes AI applications that do not pose a high risk but still entail certain transparency obligations. In particular, the use of AI must be disclosed to users of such systems. Typical examples are chatbots and virtual assistants: users need to be informed that they are interacting with a machine. For generative AI (such as models that generate text or images), the EU AI Act also stipulates that AI-generated content must be recognizable as such, e.g., by marking synthetic media with an “AI-generated” label. So-called deepfakes, i.e., AI-generated content that realistically simulates real people, must also be clearly and visibly labelled as artificial. These transparency requirements are intended to prevent people from unknowingly mistaking AI output for authentic content.

- Minimal or no risk: A large number of everyday AI applications (e.g. spam filters, AI in video games) fall into the category of minimal risk or are classified as completely non-risky. The EU AI Act does not provide for any additional requirements for such systems.

Companies must, therefore, first take a holistic inventory of their AI portfolio in order to be able to classify AI applications according to these categories. In particular, it is necessary to identify which existing or planned AI systems must be classified as high-risk AI systems, as the extensive obligations described below apply to them.
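
The screening logic described above can be illustrated with a minimal Python sketch. It assumes a simplified set of yes/no screening questions per AI system; the class and field names (`AISystemProfile`, `uses_prohibited_practice`, and so on) are hypothetical and are not taken from the EU AI Act or from ServiceNow. An actual classification depends on a legal assessment of the individual use case.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


@dataclass
class AISystemProfile:
    """Hypothetical screening answers for one AI system (not an official schema)."""
    name: str
    uses_prohibited_practice: bool = False     # e.g. social scoring
    high_risk_area: bool = False               # e.g. applicant selection, credit checks
    interacts_with_people: bool = False        # e.g. chatbot
    generates_synthetic_content: bool = False  # e.g. image or text generation


def classify(profile: AISystemProfile) -> RiskTier:
    """Return the strictest tier that applies; checks run from most to least severe."""
    if profile.uses_prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if profile.high_risk_area:
        return RiskTier.HIGH
    if profile.interacts_with_people or profile.generates_synthetic_content:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# Illustrative portfolio; the systems are invented examples.
portfolio = [
    AISystemProfile("spam filter"),
    AISystemProfile("support chatbot", interacts_with_people=True),
    AISystemProfile("applicant ranking", high_risk_area=True),
]
tiers = {p.name: classify(p) for p in portfolio}
high_risk = [name for name, tier in tiers.items() if tier is RiskTier.HIGH]
```

Ordering the checks from most to least severe means that a system matching several criteria always lands in its strictest tier, which is the tier that determines the obligations to be met.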

Requirements for high-risk AI systems

For high-risk AI systems, the EU AI Act prescribes a robust internal control system. Providers (developers or distributors) of such AI systems must establish a systematic risk management system that covers the entire life cycle of the AI system. Risks must be identified, analyzed and mitigated on an ongoing basis, from planning through development and testing to ongoing operation. Specifically, the EU AI Act sets various requirements for high-risk AI systems in this context (Chapter III, Section 2 EU AI Act) and requires the providers and operators of high-risk AI systems to comply with various obligations:

- Risk management system (Art. 9 EU AI Act): Providers of high-risk AI systems must establish a continuous risk management process that ensures the systematic identification, analysis and mitigation of the risks that the AI system may pose to health, safety and the fundamental rights enshrined in the Charter of Fundamental Rights of the European Union, including democracy, the rule of law, and environmental protection. Risk management spans the entire life cycle of the AI system, from planning through testing to ongoing operations, and must be updated regularly, as risks can change while the AI application is in operation.

- Data and data governance (Art. 10 EU AI Act): Extensive quality criteria must be met when data is used for training, validation and testing purposes. For example, procedures must be established so that the data sets used are as relevant, representative, error-free and complete as possible in order to minimize the likelihood of distortions or errors in the operation of an AI system. Where necessary, dataset checks and preprocessing steps (e.g., cleansing or bias checks) must be carried out in order to comply with the requirements of the EU AI Act. The EU AI Act also explicitly calls for measures to avoid discriminatory results due to inadequate data. These measures are intended to ensure that AI systems work reliably and equitably without systematically disadvantaging certain groups.

- Technical documentation (Art. 11 and 18 EU AI Act): Providers of high-risk AI must prepare detailed technical documentation (see also Annex IV EU AI Act). Among other things, the system purpose, functionality, model architecture, training data, performance metrics, and all measures taken are documented. This documentation must enable third parties (e.g., the notifying authority) to understand the system and check for compliance. The technical documentation must be retained for a period of at least ten years from the time the high-risk AI system is placed on the market or put into service.

- Record-keeping obligations (Art. 12 & Art. 19 EU AI Act): High-risk AI systems must be designed in such a way that relevant events are automatically logged. Decisions or usage processes should be recorded in a comprehensible way. The logs must be kept by providers and operators for a reasonable period, but at least six months, to be able to investigate, for example, the cause of wrong decisions.

- Transparency and provision of information to operators (Art. 13 EU AI Act): High-risk AI must be designed and developed in such a way that its operation is sufficiently transparent for operators to be able to correctly interpret the output and use it appropriately. Providers must supply understandable instructions for use for each high-risk AI system. These include information on the purpose and functionality of the system, its performance (e.g., accuracy metrics, robustness, safety measures), known limitations and possible risks, and guidance on how to use it correctly. This is to ensure that users can understand the outputs of an AI system and avoid misuse.

- Human oversight (Art. 14 EU AI Act): High-risk AI systems must be designed during development so that they can be monitored and controlled by humans through appropriate measures (“human oversight”). For example, the AI system should provide alert indicators or interfaces so that human supervisors can intervene if the system reacts incorrectly or produces undesirable results. These technical precautions support the operator in practically implementing the human oversight measures envisaged by the provider, such as the possibility of overriding automated recommendations or switching off the system if necessary.

- Accuracy, robustness, and cybersecurity (Art. 15 EU AI Act): High-risk AI systems must operate with reasonable accuracy, perform consistently, and be robust against disruptions, errors, or misuse. This applies to all intended operating conditions over the entire life cycle. Where necessary, technical redundancies (e.g. failsafe modes or back-up systems) must be provided to absorb safety-critical failures. In addition, providers must take appropriate cybersecurity measures to ensure that the AI system is protected against manipulation or attacks. Known attack patterns such as data poisoning (targeted manipulation of training data), model manipulation (targeted negative modification of the AI model itself) or adversarial inputs (deliberately manipulated input data) are to be proactively prevented, detected and fended off. Self-learning AI models must also be designed in such a way that negative feedback loops (e.g., progressive bias caused by one’s own erroneous outputs) are avoided or limited by correction mechanisms.

- Quality management system (Art. 17 EU AI Act): Providers of high-risk AI systems must implement an effective quality management system (QMS). This QMS ensures that all the above requirements and processes are systematically adhered to and documented. This includes clear procedures for design controls, testing, validation, and quality assurance of the AI system, as well as change management measures in case subsequent adjustments are made to the system. The QMS must also ensure the company’s responsibilities are clearly regulated and that personnel are adequately trained. The documentation of the QMS must be retained for a period of at least ten years from the time the high-risk AI system is placed on the market or put into service.
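
The retention periods named above (at least ten years for the technical and QMS documentation, at least six months for logs) can be turned into concrete dates with a minimal Python sketch. The function names and the example dates are illustrative assumptions; the sketch also assumes that the six-month period is counted in calendar months from the creation of a log entry, which should be confirmed against the applicable legal guidance.

```python
import calendar
from datetime import date

# Minimum retention periods as stated in the text above.
DOCUMENTATION_RETENTION_MONTHS = 120  # "at least ten years"
LOG_RETENTION_MONTHS = 6              # "at least six months"


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    index = start.month - 1 + months
    year = start.year + index // 12
    month = index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def earliest_disposal_dates(placed_on_market: date, log_created: date) -> dict:
    """Earliest dates on which records may be disposed of (hypothetical helper)."""
    documentation_until = add_months(placed_on_market, DOCUMENTATION_RETENTION_MONTHS)
    return {
        "technical_documentation": documentation_until,
        "qms_documentation": documentation_until,
        "log_entry": add_months(log_created, LOG_RETENTION_MONTHS),
    }


# Invented example dates for illustration only.
dates = earliest_disposal_dates(
    placed_on_market=date(2026, 8, 2),
    log_created=date(2026, 8, 31),
)
```

Because these are minimum periods, the computed dates mark the earliest possible disposal; longer internal retention rules or other legal requirements may still apply.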

Beyond internal requirements, the provision of high-risk AI is subject to further regulatory scrutiny. For example, a conformity assessment must be carried out before a system is placed on the market (see, among others, Art. 6 para. 1 (b) EU AI Act); where no harmonized standards or specific exceptions apply, this may require the involvement of an independent notified body. In addition, high-risk AI systems must be registered in an EU database before they can be used. This registration (Art. 49 EU AI Act) collects core information about the system (including the provider, purpose, classification, etc.) and serves to ensure transparency vis-à-vis authorities and the public. To this end, the EU Commission operates a central database for high-risk AI in accordance with Art. 71 EU AI Act. These additional formal obligations, including possible involvement of notified bodies, CE marking, declaration of conformity and registration, ensure that high-risk AI only enters the market in a demonstrably compliant and officially traceable manner.

Transparency obligations for providers and operators of certain AI systems (Art. 50 EU AI Act)

In addition to the regulations for high-risk AI, the EU AI Act also defines transparency obligations for certain other AI applications that do not pose a high risk but are of particular importance in the everyday lives of users. These requirements are primarily intended to ensure that people recognize when they are dealing with AI or consuming AI-generated content. The following are particularly affected:

- AI that interacts with humans (e.g., chatbots): If an AI system is intended to interact directly with natural persons, the provider must ensure that the persons concerned are informed that they are dealing with an AI system. In practice, this means that chatbots, virtual assistants, or similar systems must identify themselves to the user as an AI system, unless this is already obvious from the circumstances.

- Generative AI for synthetic content: Providers of AI systems that generate synthetic or manipulated content (text, image, audio, video) must take technical precautions to ensure that the artificially generated content is recognizable. In practice, such content should be provided with permanently recognizable notices or watermarks (machine-readable and perceptible to humans) that indicate, for example, that an image or text has been artificially created. No labeling is necessary if the AI system only provides editorial support, such as autocorrection or image filters, and does not significantly change human input.

- Emotion recognition and biometric categorization: If an actor uses an AI system to recognize emotions or to categorize people biometrically (for example, systems that infer states of mind from facial expressions or assign people to specific demographic groups), the operator must ensure that the persons concerned are informed about this.

- Deepfakes: Anyone who uses AI systems to create or distribute realistic-looking artificial media content (so-called deepfakes) must make it clear that the content is artificially generated or manipulated. For example, an AI-generated video should carry a notice that it is not real. This applies in particular if such content serves to inform public opinion; in that case it must be disclosed that the text or material was artificially created.

These transparency requirements for AI applications with limited risk are intended to strengthen user trust and prevent abuse without unnecessarily inhibiting the ability to innovate. Based on these specifications, users know when they are communicating with an AI and can better assess content.
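
As a simple illustration of labeling that is both perceptible to humans and machine-readable, the following Python sketch attaches a visible notice and a JSON metadata record to generated text. The label wording, the metadata fields and the function name are assumptions chosen for illustration; they do not represent a standardized watermarking scheme or a format prescribed by the EU AI Act.

```python
import json

VISIBLE_NOTICE = "[AI-generated]"  # hypothetical wording of the human-readable label


def label_generated_text(text: str, generator: str) -> dict:
    """Return the content with a visible notice plus a machine-readable marker."""
    metadata = {"ai_generated": True, "generator": generator}
    return {
        "content": f"{VISIBLE_NOTICE} {text}",
        "metadata": json.dumps(metadata, sort_keys=True),
    }


def is_marked_ai_generated(item: dict) -> bool:
    """Check the machine-readable marker, e.g. before publishing content."""
    return json.loads(item["metadata"]).get("ai_generated") is True


labelled = label_generated_text("Quarterly summary draft.", generator="example-model")
```

Keeping the visible notice and the metadata separate allows downstream systems to verify the marker even if the display text is reformatted.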

General purpose AI models

Another important aspect of the EU AI Act is its special requirements for so-called general-purpose AI models, especially those that are widely used as foundation models. These include, for example, large language models or multimodal models that have not been developed for a single specific purpose but can be used in different areas. The Regulation recognizes that such models are re-used by many downstream providers and can potentially have a broad impact.

The key obligations for providers of general-purpose AI models are:

- Technical documentation (Art. 53 para. 1 (a) EU AI Act): The provider must prepare comprehensive technical documentation of the model and keep it up to date. Among other things, this documentation provides insight into the training and testing process, the model architecture, performance evaluations and protective measures taken. The aim is for relevant authorities to be able to request the documentation if necessary and to check whether the model complies with the regulations.

- Disclosure of information to downstream users (Art. 53 para. 1 (b) EU AI Act): General-purpose AI models are often integrated by third parties in order to build specific AI systems on top of them. Therefore, the model provider must provide sufficient information and documentation for these “downstream” providers. Specifically, developers who build on the model are to be informed about its capabilities, limitations, recommended areas of application, and known risks. This allows them to meet their own obligations and assess whether the model is suitable for a planned high-risk application.

- Copyright compliance (Art. 53 para. 1 (c) EU AI Act): It must be ensured that the model respects applicable copyrights. In particular, the provider must ensure, including by technical means, that it does not use data for which the rights holders have reserved their rights (a “machine learning ban”). The development of general-purpose AI models must therefore stay within the limits of applicable copyright law.

- Disclosure of training material (Art. 53 para. 1 (d) EU AI Act): Providers must publish a summary of the content used for training. This summary (in a format specified by the Artificial Intelligence Office) is intended to show in an understandable way which data sources were used for model training.

- Requirements for foreign providers (Art. 54 EU AI Act): Providers of general-purpose AI models that are not based in the EU and market their model in the EU must first appoint an authorized representative established in the EU. This representative acts on behalf of the provider vis-à-vis supervisory authorities and ensures that, for example, technical documentation is kept available. This is intended to make it easier to enforce the rules against providers outside Europe.

Due to their reach and capabilities, general-purpose AI models can entail systemic risks, i.e., possible large-scale negative impacts on important areas (such as critical infrastructure, democratic processes or society as a whole). The EU AI Act introduces the category “general-purpose AI models with systemic risk” (Art. 51 EU AI Act). A model falls into this category if it has high-impact capabilities (measured by the criteria in Annex XIII) and thus a correspondingly high potential for abuse or harm (e.g., large and widely used language models, potentially including GPT-4 or Claude). In addition, a general-purpose AI model is presumed to pose systemic risk if the cumulative amount of computation used for its training, measured in floating-point operations (arithmetic operations on non-integer numbers, used here as a measure of the computing effort spent, for example, on training neural networks), is greater than 10^25. The following additional requirements apply to such models (Art. 55 EU AI Act):

- The provider must carry out stricter evaluations of the model, including attack tests, in order to identify vulnerabilities or opportunities for abuse at an early stage. The test results and any countermeasures taken must be documented.

- Targeted risk mitigation measures must be implemented to address any system-wide risks at EU level. The provider must therefore assess what negative effects their model could have at Union level (e.g., for information security or public debate) and take appropriate precautions to limit these risks.

- The provider must record and document serious incidents and corrective actions taken and report them immediately to the supervisory authorities (the Artificial Intelligence Office and, where applicable, national authorities).

- An appropriate level of cybersecurity must be ensured for both the AI model and the physical infrastructure. This includes, for example, protecting the AI model itself and the associated infrastructure from attacks, manipulation or espionage.
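
The compute-based presumption can be checked with simple arithmetic, as the following Python sketch shows. The threshold of 10^25 floating-point operations comes from the regulation. The estimate of roughly 6 operations per parameter per training token is a widely used rule of thumb for dense transformer models and is an assumption of this sketch, not part of the EU AI Act; the model sizes in the example are hypothetical.

```python
# Threshold for the presumption of systemic risk (floating-point operations).
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(parameters: float, training_tokens: float) -> float:
    """Rough heuristic (assumption, not from the Act): ~6 FLOPs per parameter per token."""
    return 6.0 * parameters * training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if cumulative training compute is greater than the threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical models: 7 billion parameters on 2 trillion tokens,
# and 1 trillion parameters on 10 trillion tokens.
small_model_flops = estimate_training_flops(7e9, 2e12)    # about 8.4e22
large_model_flops = estimate_training_flops(1e12, 1e13)   # about 6e25

small_flagged = presumed_systemic_risk(small_model_flops)
large_flagged = presumed_systemic_risk(large_model_flops)
```

Where a provider has measured training compute directly, that figure should be used in place of the heuristic estimate.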

To facilitate compliance with these obligations, the regulation promotes the development of so-called codes of practice for providers of general-purpose AI models (Art. 56 EU AI Act), which are industry-wide standards intended to establish a level of transparency, security, and risk management that complies with the EU AI Act. Providers who adhere to such recognized codes can use them to demonstrate their compliance.

Monitoring and reporting obligations

Even after an AI application has been placed on the market and put into operation, the responsibility of the stakeholders does not end. The EU AI Act prescribes continuous monitoring and proactive reporting obligations to ensure the ongoing security and compliance of AI systems. Key aspects here are:

- Post-market surveillance by providers (Art. 72 EU AI Act): Every provider of a high-risk AI system must set up a post-market surveillance system. Specifically, relevant data on the AI system performance, which can be provided by the providers or operators or collected from other sources, is to be actively and systematically collected, documented, and analysed over its entire service life. If the AI system interacts with other systems, this interaction must also be included. The monitoring system must be based on a structured plan (part of the technical documentation) and should be adapted to the system’s risk level (more extensive monitoring with higher risk).

- Obligation to report serious incidents (Art. 73 EU AI Act): Despite all precautions, serious incidents or malfunctions can occur. The EU AI Act obliges high-risk AI providers to immediately report any serious incident to the market surveillance authority. For example, situations in which the AI system leads to a fatal or life-threatening event, significant economic damage, or serious violations of fundamental rights are considered “serious.” The notification should be made as soon as a causal relationship with the AI system is known or probable, and at the latest within 15 days after the provider (or operator) has become aware of the incident. In particularly critical cases (e.g., in the event of death or a serious or irreversible disruption to the management or operation of critical infrastructure), shortened deadlines apply. Following the reporting of a serious incident, the provider must investigate it, conduct a risk assessment, and take corrective action.
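
A minimal Python sketch shows how the reporting deadline could be tracked. It assumes that the 15-day period is counted in calendar days from the date of awareness. The shortened deadlines for particularly critical cases are deliberately left as a parameter to be taken from the regulation; the function names and dates are illustrative.

```python
from datetime import date, timedelta
from typing import Optional

GENERAL_REPORTING_DAYS = 15  # general deadline stated in the text above


def reporting_deadline(aware_on: date, shortened_days: Optional[int] = None) -> date:
    """Latest reporting date; pass shortened_days for particularly critical cases."""
    days = GENERAL_REPORTING_DAYS
    if shortened_days is not None:
        if not 0 < shortened_days <= GENERAL_REPORTING_DAYS:
            raise ValueError("shortened_days must be between 1 and 15")
        days = shortened_days
    return aware_on + timedelta(days=days)


def days_remaining(aware_on: date, today: date,
                   shortened_days: Optional[int] = None) -> int:
    """Days left until the deadline; a negative value means it has passed."""
    return (reporting_deadline(aware_on, shortened_days) - today).days


# Invented example: the provider became aware of an incident on 1 September 2026.
deadline = reporting_deadline(date(2026, 9, 1))
remaining = days_remaining(date(2026, 9, 1), today=date(2026, 9, 10))
```

Since the notification is due as soon as a causal link is known or probable, the computed date is an upper bound rather than a target.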

“Successful risk management in the context of the EU AI Act requires consistent implementation and continuous monitoring. An integrated control system helps to effectively manage risks and identify them at an early stage.” Andrej Greindl, Managing Director, Protiviti Germany

The EU AI Act creates a comprehensive, risk-based framework to ensure responsible use of AI in the EU. Organizations that develop or deploy AI systems are now faced with the challenge of implementing a variety of new regulatory requirements in a structured manner, especially regarding risk management, data quality, transparency, human oversight, and continuous monitoring. While high-risk AI systems are subject to particularly strict requirements, the regulation also establishes clear rules for limited-risk applications as well as general-purpose AI models. These comprehensive requirements are ultimately intended to ensure that AI technologies are used in a trustworthy, secure, and socially accepted manner, while at the same time not weakening Europe’s innovative strength, but rather further strengthening trust in Europe as a business location.

Operational implementation of the EU AI Act with ServiceNow

Many companies are now asking themselves how the requirements described can be implemented in a concrete, efficient and sustainable way. A best practice is to use an integrated governance, risk, and compliance platform. The following sections describe four relevant use cases that show how organizations can implement the requirements of the EU AI Act with the extensive functionalities of the ServiceNow platform. The focus here is on the ServiceNow AI Control Tower, which serves as a central control instance for AI systems and ensures operational compliance in interaction with existing modules (e.g., IT Operations Management (ITOM), Integrated Risk Management (IRM) and Third Party Risk Management (TPRM)).

“The implementation of the EU AI Act shows that technological and regulatory requirements must go hand in hand. A central platform solution is crucial here to effectively bring both worlds together.” Kentaro Ellert, Associate Director, Protiviti Germany

Use case 1: AI inventory – central directory of all AI systems based on CSDM 5.0

Under the EU AI Act, companies must maintain a complete overview of the AI systems and models they use. An AI inventory serves as a central register of all AI applications, including their intended purpose, risk classification, data sources, and responsibilities. This inventory is the basis for transparency and accountability, as only known and fully captured AI systems can be effectively monitored and controlled. In addition, a central directory makes it easier to comply with the documentation and reporting obligations arising from AI regulation (e.g., registration of high-risk AI systems with authorities).

The ServiceNow platform makes it possible to build such an AI inventory through the AI Control Tower, for example using the new Common Service Data Model (CSDM) 5.0. Specifically, AI assets are stored in the Configuration Management Database (CMDB) and classified according to CSDM. CSDM 5.0 has introduced specific structural components for AI, such as AI System Digital Asset (for AI applications) and associated objects for AI models and AI datasets. This allows each AI system to be mapped as a configuration item with standardized attributes and relationships. In particular, AI systems can be directly assigned to business processes or associated (IT) services. In this way, AI is embedded in the core of business and IT services, so dependencies are transparent. The inventory is not just a technical list but part of the business architecture: all AI components and their relationships to applications, services and data flows are recorded centrally. This forms the basis for understanding the impact of AI on business services and making strategic decisions based on facts.

Key features of AI inventory:

- ServiceNow AI Control Tower: Serves as a central platform for AI governance. All the company’s AI systems are recorded here (AI Asset Inventory) and stored with relevant information (intended use, responsible persons, risk classification), even if they were not developed within the ServiceNow platform. Through the AI stewardship concept, each AI model is assigned an AI owner who is responsible for monitoring and approval. In addition, approval workflows ensure that no AI-powered service goes live without thorough review and approval beforehand.

- CSDM-supported structure: Each AI system is modeled according to uniform standards in the CMDB and linked to business services. This ensures consistent data and context on business processes.

- Integration into the platform: The inventory is the backbone of the solution on the ServiceNow platform. It allows the full integration of the governance processes, assessments, evaluations and control measures relevant to the EU AI Act, which are triggered by the identified risk level of each AI solution.

- Lifecycle tracking: From planning through development to operation, the status of each AI solution is documented in a traceable way. Changes (e.g., new models or version updates) are trackable in the inventory, which forms the basis for change management and continuous compliance checks. Full integration with other ServiceNow modules, such as IT Service Management and Security Operations, provides additional relevant insights and enriches the AI inventory to improve the governance of these solutions.
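
To make the structure of such an inventory more tangible, the following Python sketch models one inventory record with its related models, datasets and business services. It is a conceptual illustration only: the class and field names are assumptions and do not reproduce the CSDM 5.0 schema, CMDB tables or any ServiceNow API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AIModel:
    name: str
    version: str


@dataclass
class AIDataset:
    name: str
    purpose: str  # "training", "validation" or "testing"


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI inventory (illustrative, not the CSDM schema)."""
    name: str
    intended_purpose: str
    risk_classification: str
    owner: Optional[str] = None
    lifecycle_stage: str = "planning"  # planning -> development -> operation
    business_services: List[str] = field(default_factory=list)
    models: List[AIModel] = field(default_factory=list)
    datasets: List[AIDataset] = field(default_factory=list)

    def gaps(self) -> List[str]:
        """List governance attributes that are still missing from this record."""
        missing = []
        if not self.owner:
            missing.append("no AI owner assigned")
        if not self.business_services:
            missing.append("not linked to a business service")
        if not self.models:
            missing.append("no AI model recorded")
        return missing


# Invented example record for illustration.
record = AISystemRecord(
    name="applicant ranking",
    intended_purpose="pre-sorting of job applications",
    risk_classification="high risk",
    models=[AIModel("ranking-model", "1.2")],
    datasets=[AIDataset("historic applications", "training")],
)
open_gaps = record.gaps()
```

A completeness check of this kind helps ensure that every recorded AI system has an accountable owner and a business context before further assessments are triggered.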

Use case 2: risk assessment and control

The work does not end with the commissioning of an AI system; the EU AI Act requires ongoing monitoring and quality assurance of AI systems. Every AI use case should be subjected to a standardized risk assessment before development. First, the risk profile of the AI system is determined, for example by assigning it to a risk class (e.g., minimal, limited, or high risk according to the EU AI Act) and identifying use-case-specific risks. On the basis of this classification, a catalogue of suitable risk mitigation measures is then derived. For example, high-risk AI systems require more extensive controls, such as stricter validation, detailed documentation, and human oversight, while lower-risk systems require only basic controls. It is important to take into account the company’s individual risk appetite: if identified AI risks are not acceptable, a mechanism for pausing or adapting the project must take effect. This means that before an AI model goes live, it should be clearly demonstrable what risks exist (e.g., bias, data protection, wrong decisions) and which controls have been implemented to mitigate these risks.

In addition to the AI inventory and approval workflows already described in the ServiceNow AI Control Tower, companies can implement the following ServiceNow functionalities:

- Risk management: ServiceNow’s risk management module is used to systematically record and assess risks for each AI application. Based on the EU AI Act, it can be determined, for example, which AI systems are considered high-risk and receive priority attention. Risks such as discriminatory results, data leaks, or model errors are documented in the risk register and subject to an assessment. Measures or controls can be directly assigned to identified risks. Thanks to the integration of risk management with the AI Control Tower, newly recorded AI risks are automatically assigned to the respective AI asset information in the Control Tower. This allows the governance team to keep track of the risk status of each AI in real time, from development to release.

- Policy and compliance management: The policy and compliance module makes it possible to define company-wide AI policies and controls and compare them with regulatory requirements. ServiceNow provides predefined content for this purpose, such as an AI risk and compliance content pack, which feeds requirements from important AI regulations (e.g., EU AI Act, NIST AI RMF) into the system as control targets. This allows a gap analysis to be carried out at an early stage: Which existing controls already meet the new AI requirements and where are there gaps? New or adapted controls are then stored in the system.

This combined solution makes it possible to apply a comprehensive AI governance framework even before an AI go-live. All relevant information and evidence (inventory, responsibilities, risk assessments, controls) is centrally available in ServiceNow, giving relevant stakeholders complete visibility and control over all AI initiatives, ensuring AI systems are compliant with internal policies and the requirements of the EU AI Act from the start.
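
The go-live logic described in this use case can be sketched in Python as a simple gate: every risk needs at least one assigned control, and its residual score must stay within the company’s risk appetite. The scoring scale, the class and function names and the example register are assumptions for illustration; they do not represent the data model or API of the ServiceNow risk management module.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Risk:
    description: str
    residual_score: int  # hypothetical scale from 1 (low) to 25 (high)
    controls: List[str] = field(default_factory=list)


def go_live_blockers(risks: List[Risk], risk_appetite: int) -> List[str]:
    """Return the reasons that prevent go-live; an empty list means release is possible."""
    blockers = []
    for risk in risks:
        if not risk.controls:
            blockers.append(f"{risk.description}: no control assigned")
        if risk.residual_score > risk_appetite:
            blockers.append(
                f"{risk.description}: residual score {risk.residual_score} "
                f"exceeds appetite {risk_appetite}"
            )
    return blockers


# Invented risk register for one AI use case.
register = [
    Risk("bias in applicant ranking", residual_score=12,
         controls=["bias testing", "human review"]),
    Risk("personal data leakage", residual_score=6, controls=["access control"]),
    Risk("wrong decisions on edge cases", residual_score=8),
]
blockers = go_live_blockers(register, risk_appetite=9)
```

Returning the reasons rather than a plain yes/no decision makes it easier to document why a project was paused and what has to change before approval.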

Use case 3: continuous AI monitoring and compliance in operations

As soon as AI systems are in productive use, companies must continuously keep an eye on their performance and on any changes. Models themselves can change over time (e.g., through so-called model drift in self-learning systems), and the use case for an AI system can also evolve, which in turn can lead to changes to the AI system. The EU AI Act therefore stipulates that high-risk AI must be monitored on an ongoing basis and re-evaluated when new findings emerge or changes are made. This means that the risk profile of an AI system, and thus its compliance with control requirements, must be verified not just once before commissioning but over the entire life cycle. If incidents occur, such as wrong decisions by the AI or deviations from guidelines, these must be documented and rectified.

The following ServiceNow capabilities can make a significant contribution to this reactive AI governance during operation:

- AI governance workspace: As part of the AI Control Tower, ServiceNow provides a dedicated AI governance workspace. It offers a real-time overview of the status of AI models within the organization: compliance metrics, open risks, and test results per AI system are available at a glance. The workspace helps ensure that AI systems follow company policies and global regulations, with a particular focus on privacy, data governance, and ethical AI. It also manages the entire lifecycle of AI assets, from onboarding and offboarding to skill management and performance monitoring. It allows organizations to adopt a risk-based approach to AI technology management and solution implementation, giving the relevant stakeholders a holistic view of the company’s position and helping them make informed business decisions at all levels. This enhances the traceability and explainability of AI outputs, a core requirement for trustworthy and ethical AI that regulations also refer to explicitly.

- Continuous compliance monitoring: ServiceNow’s policy and compliance module supports regular reviews and audits of AI systems. For example, recurring control tests can be set up that automatically check whether an AI service continues to comply with all defined controls. Results of such tests or audits are documented in the system. If a control target is missed (such as a violation of a prescribed data protection measure by the AI), the system generates a compliance issue for follow-up.

- Integration with risk management: If deviations or new risks are identified, they flow directly into risk management. ServiceNow can automatically create a risk entry or incident that describes the finding (e.g., “AI model XY shows systematic bias against group Z”). This risk or compliance issue is then processed in the risk management module by assigning tasks to those responsible for taking countermeasures (e.g., retraining the model, adjusting the underlying data, or adding control steps). Due to the close integration between the AI Control Tower and the GRC modules, such processes are transparent: the AI governance workspace shows the current risk status of each AI system, and the risk management module links to all technical details from the Control Tower.

- Integration with IT service management: If an error or incident is detected in the operation of an AI application, ServiceNow can automatically create a ticket in IT service management (ITSM) (e.g., an incident). This ensures that any disruptions are immediately tracked by the IT/AI operations teams and resolved as part of established incident management processes. In this way, the company bridges the gap between AI governance and operational ITSM processes so that AI disruptions can be addressed in a targeted and timely manner.

With this interaction, ServiceNow helps ensure that AI systems are operated responsibly even after go-live. This creates a continuous cycle of monitoring, detecting, and reacting: the AI is continuously monitored, findings about rule violations or performance problems are seamlessly transferred to the governance system, and structured corrective measures are taken. This continuous improvement process helps AI applications operate reliably within defined risk tolerances in the long term.
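The cycle of recurring control tests and follow-up issues can be sketched as follows. This is a simplified illustration in plain Python under stated assumptions, not ServiceNow’s implementation: `ControlTest`, `ComplianceIssue`, `run_control_tests`, the metric names, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ControlTest:
    """A recurring check for one control (hypothetical structure)."""
    control: str
    check: Callable[[dict], bool]  # returns True when the control is met


@dataclass
class ComplianceIssue:
    """A follow-up item created when a control test fails."""
    ai_system: str
    control: str
    description: str


def run_control_tests(ai_system: str, metrics: dict, tests: list[ControlTest]) -> list[ComplianceIssue]:
    """Run every control test and return one issue per failed control."""
    issues = []
    for test in tests:
        if not test.check(metrics):
            issues.append(
                ComplianceIssue(
                    ai_system=ai_system,
                    control=test.control,
                    description=f"Control '{test.control}' failed for {ai_system}",
                )
            )
    return issues


# Illustrative controls and thresholds (assumptions for this sketch)
tests = [
    ControlTest("Bias within tolerance", lambda m: m["bias_gap"] <= 0.05),
    ControlTest("Personal data masked", lambda m: m["pii_masked"]),
]

# Metrics as they might be reported by a monitoring job (made-up values)
metrics = {"bias_gap": 0.12, "pii_masked": True}

issues = run_control_tests("credit-scoring-model", metrics, tests)
for issue in issues:
    print(issue.description)
```

In a real deployment, each resulting issue would be handed to the risk management or ITSM process for assignment and remediation; here it is simply collected in a list.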

Use case 4: managing AI supplier risks

Many organizations source AI technologies or services from third-party providers, whether by purchasing pre-trained models, leveraging external AI APIs, or engaging AI consulting services. Despite outsourcing, the company ultimately remains responsible for ensuring that these external AI components also meet regulatory requirements. According to the EU AI Act, companies must keep an eye on the risks in their supply chain, for example when an external AI provider does not have sufficient controls or its AI is poorly documented. The challenge is to identify such supplier risks at an early stage and either derive suitable countermeasures or decide not to use an AI service that is too risky.

ServiceNow enables this by using the third-party risk management module in conjunction with the AI governance functions:

- Third-party risk management (TPRM): The TPRM module provides a standardized process for assessing and managing supplier risks. For each (AI) supplier, a profile with a risk rating is maintained in ServiceNow. The company can configure customized risk assessments for AI suppliers, for example in the form of questionnaires to be completed by the vendor. These questionnaires may include AI-specific control questions (e.g., Does the vendor have its own AI risk management? Is training data documented? Are there bias checks?). ServiceNow automates the sending and evaluation of such assessments. The vendor’s answers are validated in the TPRM module and aggregated into a risk score. This makes it possible to see objectively which AI suppliers potentially pose high risks. In the event of conspicuous answers or missing evidence, the company can define follow-up measures in the TPRM module, such as additional audits at the supplier or contractual obligations.

- Policy and compliance for third-party requirements: The policy and compliance module can also be used to formalize third-party requirements. For example, an internal policy can state that all third-party AI providers must meet certain minimum standards (such as ethics guidelines, security certificates, or transparency reports). These requirements are recorded as control targets in ServiceNow and assigned to the respective suppliers. The system can then track whether a vendor has provided the required evidence. If a criterion is not met, ServiceNow generates a corresponding compliance issue against the supplier for the TPRM team to follow up.

- Integration with AI Control Tower: The AI Control Tower becomes the connecting layer between internal AI governance and third-party management. In the central AI inventory, it can be noted which AI systems come from external providers. For such items, a link can be made to the corresponding third-party risk entry allowing information to flow together. In the AI Control Tower, the AI governance team can immediately see whether, for example, a certain external AI module is subject to restrictions (e.g., “only released for internal test operation” due to open risks). At the same time, updates are automatically visible in the TPRM module if the risk score of an AI asset changes. This cross-module transparency ensures that all AI risks – whether internal or via third-party providers – are managed consistently.

By combining AI Control Tower and vendor risk management, the company achieves end-to-end insights into the state of AI compliance across the value chain. Critical third-party AI providers are identified at an early stage and can be monitored or substituted in a targeted manner. At the same time, trustworthy partners can be integrated, as their compliance evidence is checked and stored centrally. Overall, this approach helps to comply with regulatory requirements and internal security standards along the supply chain without having to forego the integration of innovative AI technologies from outside.
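How questionnaire answers might be aggregated into a supplier risk score can be shown with a small sketch. The weighting scheme, the thresholds, and all names (`QUESTION_WEIGHTS`, `vendor_risk_score`, `risk_rating`) are assumptions made for illustration; the scoring logic actually used in the TPRM module is not described in this paper and may differ.

```python
# Weight per control question; higher means more important (illustrative values)
QUESTION_WEIGHTS = {
    "has_ai_risk_management": 3,
    "training_data_documented": 2,
    "bias_checks_performed": 2,
}


def vendor_risk_score(answers: dict[str, bool]) -> float:
    """Return the weighted share of controls the vendor does NOT satisfy.

    The score ranges from 0.0 (all controls met) to 1.0 (none met).
    Unanswered questions are treated as not satisfied.
    """
    total = sum(QUESTION_WEIGHTS.values())
    missed = sum(
        weight
        for question, weight in QUESTION_WEIGHTS.items()
        if not answers.get(question, False)
    )
    return missed / total


def risk_rating(score: float, high: float = 0.5, medium: float = 0.2) -> str:
    """Translate a numeric score into a rating using assumed thresholds."""
    if score >= high:
        return "high"
    if score >= medium:
        return "medium"
    return "low"


# Example vendor: one question answered "no", one left unanswered
answers = {"has_ai_risk_management": True, "training_data_documented": False}
score = vendor_risk_score(answers)
rating = risk_rating(score)
print(f"{score:.2f} -> {rating}")
```

Treating missing answers as unmet controls is a deliberately conservative choice in this sketch: a vendor that does not supply evidence is rated as if the control were absent until the evidence arrives.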

Conclusion and outlook

The EU AI Act presents companies with extensive compliance tasks. At the same time, it offers the opportunity to manage AI systematically and responsibly. With a platform like ServiceNow, the different requirements, from inventory to risk assessment and control implementation to permanent monitoring, can be translated into integrated digital processes. It is important to start early: although most provisions will not take full effect until 2026, companies should use this time effectively. In particular, building inventories, processes and control elements takes time and maturity. In addition, individual provisions already apply: the bans on certain AI practices since February 2025 and the obligations for general-purpose AI models, including transparency requirements, since August 2025.

Addendum: The EU’s General-Purpose AI Code of Practice

The European Union’s General-Purpose AI Code of Practice is a voluntary commitment for technology companies that develop or operate generative and general-purpose AI systems. The aim of the code is to establish key principles and practices to ensure the responsible use of AI, particularly with regard to transparency, data protection, accountability, explainability, and human oversight.

This code of practice was created before the EU AI Act’s obligations for general-purpose AI models became applicable, to help companies adopt practical and consistent standards for trustworthy AI at an early stage. Signing the code signals that participating companies are publicly committed to these principles and will take appropriate measures to implement them.

ServiceNow is one of the companies that have signed the General-Purpose AI Code of Practice. For companies that use ServiceNow, this means that the platform already takes into account key requirements and principles of responsible AI use, providing a robust foundation for easier compliance with future regulatory requirements, particularly those of the EU AI Act.

To learn more about our ServiceNow consulting services, contact us.